Testing a data mapping workflow

The extraction workflow is always performed on the current record in the data source. When an error is encountered, the extraction workflow stops. The data field in which the error occurred, and all data fields belonging to subsequent steps, are grayed out. Click the Messages tab (next to the Step properties pane) to see any error messages.

Note: At design time, a step automatically errors out when it exceeds the timeout value set in the preferences. This timeout does not apply to the Preprocessor and Postprocessor steps, nor to setting boundaries. See Common DataMapper preferences.

Validating records

To test the extraction workflow on all records that are displayed, and to see how the steps perform, you can either:

- Click the Validate All Records toolbar button.
- Select Data > Validate Records in the menu.

Note: The number of records displayed in the Data Viewer (200 by default) is set by the Record limit on the Settings pane. For more accurate validation measurements, temporarily increase or remove this limit.

If errors are encountered in one or more records, an error message is displayed. Errors that occur while the extraction workflow runs on the current record are also shown on the Messages tab.

Performance measurements

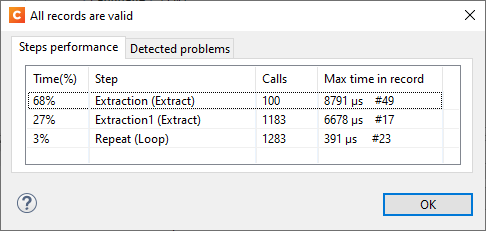

If no errors were encountered while validating the records, information about the performance of each step is displayed in a grid. These metrics help you identify which steps are consuming the most time, so that you can review them and improve overall performance.

The steps are listed from slowest to fastest, with the following columns:

- Time(%): The execution time of the step as a percentage of the total execution time of all steps.

  Note: For a Loop, Condition or Multiple Condition step, only the time it takes to evaluate the condition is measured, not the time spent executing its branches. Each step in a branch is measured on its own.

- Step: The step name (and its type).
- Calls: The total number of times the step was run.
- Max time in record: The longest time (in microseconds) the step took in a single record, and the record in which that occurred.

Note: The performance of the Preprocessor and Postprocessor steps is not measured.
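To make the grid columns concrete, the following Python sketch derives them from raw timings. It is purely illustrative: the sample format (step name, record index, duration in microseconds) and the function `build_grid` are hypothetical and not part of the DataMapper, and it assumes that "slowest to fastest" means ordering by total execution time. For a condition step, the sample is assumed to hold only the condition evaluation time, in line with the note above.

```python
from dataclasses import dataclass


@dataclass
class StepStats:
    """One row of a hypothetical performance grid."""
    name: str
    time_pct: float   # share of the total execution time of all steps, in percent
    calls: int        # number of times the step ran
    max_time_us: int  # longest single run, in microseconds
    max_record: int   # record in which that longest run occurred


def build_grid(samples: list[tuple[str, int, int]]) -> list[StepStats]:
    """Aggregate (step name, record index, duration in microseconds) samples.

    Returns one row per step, sorted from slowest to fastest by total time.
    """
    totals: dict[str, int] = {}
    calls: dict[str, int] = {}
    worst: dict[str, tuple[int, int]] = {}  # step -> (max duration, record)
    for step, record, duration_us in samples:
        totals[step] = totals.get(step, 0) + duration_us
        calls[step] = calls.get(step, 0) + 1
        if step not in worst or duration_us > worst[step][0]:
            worst[step] = (duration_us, record)

    grand_total = sum(totals.values())
    rows = [
        StepStats(
            name=step,
            time_pct=100.0 * total / grand_total if grand_total else 0.0,
            calls=calls[step],
            max_time_us=worst[step][0],
            max_record=worst[step][1],
        )
        for step, total in totals.items()
    ]
    # Largest share of time first, i.e. slowest step at the top.
    rows.sort(key=lambda row: row.time_pct, reverse=True)
    return rows


if __name__ == "__main__":
    # Made-up timings for three hypothetical steps over records 1 and 2.
    samples = [
        ("Extract address", 1, 120),
        ("Extract address", 2, 180),
        ("Condition: has orders", 1, 20),
        ("Condition: has orders", 2, 30),
        ("Extract orders", 1, 400),
        ("Extract orders", 2, 250),
    ]
    for row in build_grid(samples):
        print(f"{row.time_pct:5.1f}%  {row.name}  calls={row.calls}  "
              f"max={row.max_time_us}us (record {row.max_record})")
```

With these sample values, "Extract orders" accounts for 65% of the total time and is listed first, even though a single run of it is not always the slowest.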

Performance issues

The following issues could negatively impact the performance of a data mapping workflow.

- Boundaries are configured so that only a single data record is produced.
  Having the contents of an entire data file in a single record can cause slowdowns, or even an out-of-memory error when the data file turns out to be too large for a single record.
- A detail table contains more than 3 levels of nested tables.
  Each level of nested detail tables reduces overall performance.
- A single record contains more than 30,000 extracted data fields.
  Having more than 30,000 extracted fields in a single record (including detail tables) can slow down both the data mapping and content creation.
- The source is a text file and a single source data record contains more than 30MB of data.
  Too much data in a single source record can slow down the data mapping.

If any of these issues is encountered in the current data mapping workflow, it is listed on the Detected problems tab.
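The four issues listed above are simple threshold checks. The following Python sketch expresses them as code to make the limits explicit. `MappingSummary` and `detect_problems` are hypothetical names, not the DataMapper's API, and the byte value used for "30MB" is an assumption, since the exact definition is not stated.

```python
from dataclasses import dataclass

# Thresholds taken from the list of performance issues.
MAX_TABLE_NESTING = 3
MAX_FIELDS_PER_RECORD = 30_000
# "30MB" interpreted here as 30 * 1024 * 1024 bytes (assumption).
MAX_TEXT_RECORD_BYTES = 30 * 1024 * 1024


@dataclass
class MappingSummary:
    """Hypothetical summary of a data mapping configuration."""
    record_count: int          # records produced by the boundary settings
    max_table_nesting: int     # deepest level of nested detail tables
    max_fields_in_record: int  # extracted fields, detail tables included
    source_is_text: bool       # whether the source is a text file
    max_record_bytes: int      # largest source data record, in bytes


def detect_problems(s: MappingSummary) -> list[str]:
    """Return a description of every threshold the summary exceeds."""
    problems = []
    if s.record_count == 1:
        problems.append("Boundaries produce a single data record")
    if s.max_table_nesting > MAX_TABLE_NESTING:
        problems.append("More than 3 levels of nested detail tables")
    if s.max_fields_in_record > MAX_FIELDS_PER_RECORD:
        problems.append("More than 30,000 extracted fields in one record")
    if s.source_is_text and s.max_record_bytes > MAX_TEXT_RECORD_BYTES:
        problems.append("Text source record larger than 30MB")
    return problems


if __name__ == "__main__":
    summary = MappingSummary(
        record_count=1,
        max_table_nesting=4,
        max_fields_in_record=12_000,
        source_is_text=True,
        max_record_bytes=5_000_000,
    )
    for problem in detect_problems(summary):
        print(problem)
```

Each check uses a strict "more than" comparison, matching the wording of the list: exactly 3 nesting levels or exactly 30,000 fields is not reported.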

Improving the performance

Here are some things you can do to improve the performance of the data mapping.

- Change the boundary settings to increase the number of records detected in the source file. This reduces the size of each record and the number of extractions per record, which improves the performance of both data mapping and content creation.
- Reduce the number of extracted data fields by extracting multiple values into a single data field of type JSON.
- If the document logic permits, avoid nesting detail tables more than 3 levels deep.
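To see why a JSON field reduces the field count, the following Python sketch compares storing three order lines as individual fields with storing them in a single field that holds a JSON string. The field names and data are made up, and the sketch does not use the DataMapper's own scripting API; it only illustrates the counting.

```python
import json

# Hypothetical order lines extracted from one record.
order_lines = [
    {"sku": "A-100", "qty": 2, "price": 9.95},
    {"sku": "B-200", "qty": 1, "price": 24.50},
    {"sku": "C-300", "qty": 5, "price": 1.25},
]

# Approach 1: one data field per value (sku, qty and price on every line).
separate_fields = {
    f"line{i}_{key}": value
    for i, line in enumerate(order_lines, start=1)
    for key, value in line.items()
}

# Approach 2: all values stored in a single field holding a JSON string.
json_field = {"order_lines": json.dumps(order_lines)}

print(len(separate_fields))  # 9 fields
print(len(json_field))       # 1 field
```

The number of separate fields grows with every line and every value, while the JSON approach stays at one field per record; the values can still be recovered with `json.loads` when they are needed.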